SpotNLP

A Natural Language Interface for Boston Dynamics Spot

GitHub Repository

Overview

This project involves developing a natural language interface for the Boston Dynamics Spot robot, enabling users to command the robot using everyday language. By integrating large language models (LLMs) and gesture detection with Spot’s control systems, we aim to facilitate intuitive interactions and enhance the robot’s usability in various environments.

Human Following / Gesture Recognition

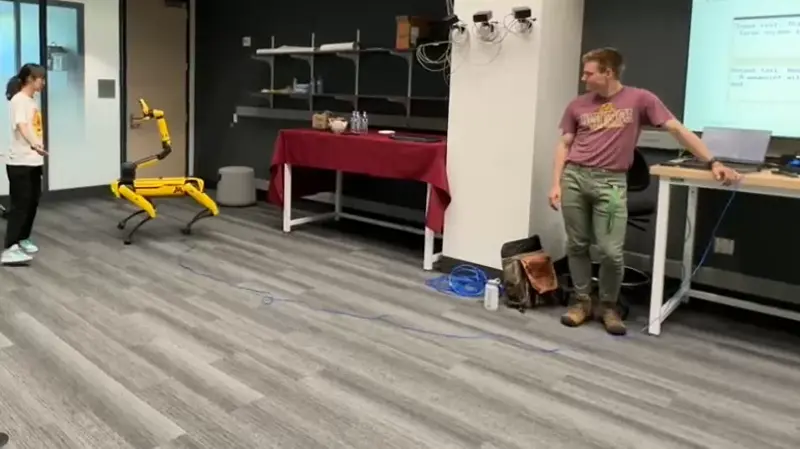

SpotNLP in action: Spot following natural language commands during a demo for DoD representatives. Spot is instructed to follow the controller, save semantic keypoints, and navigate using contextual information about the environment.

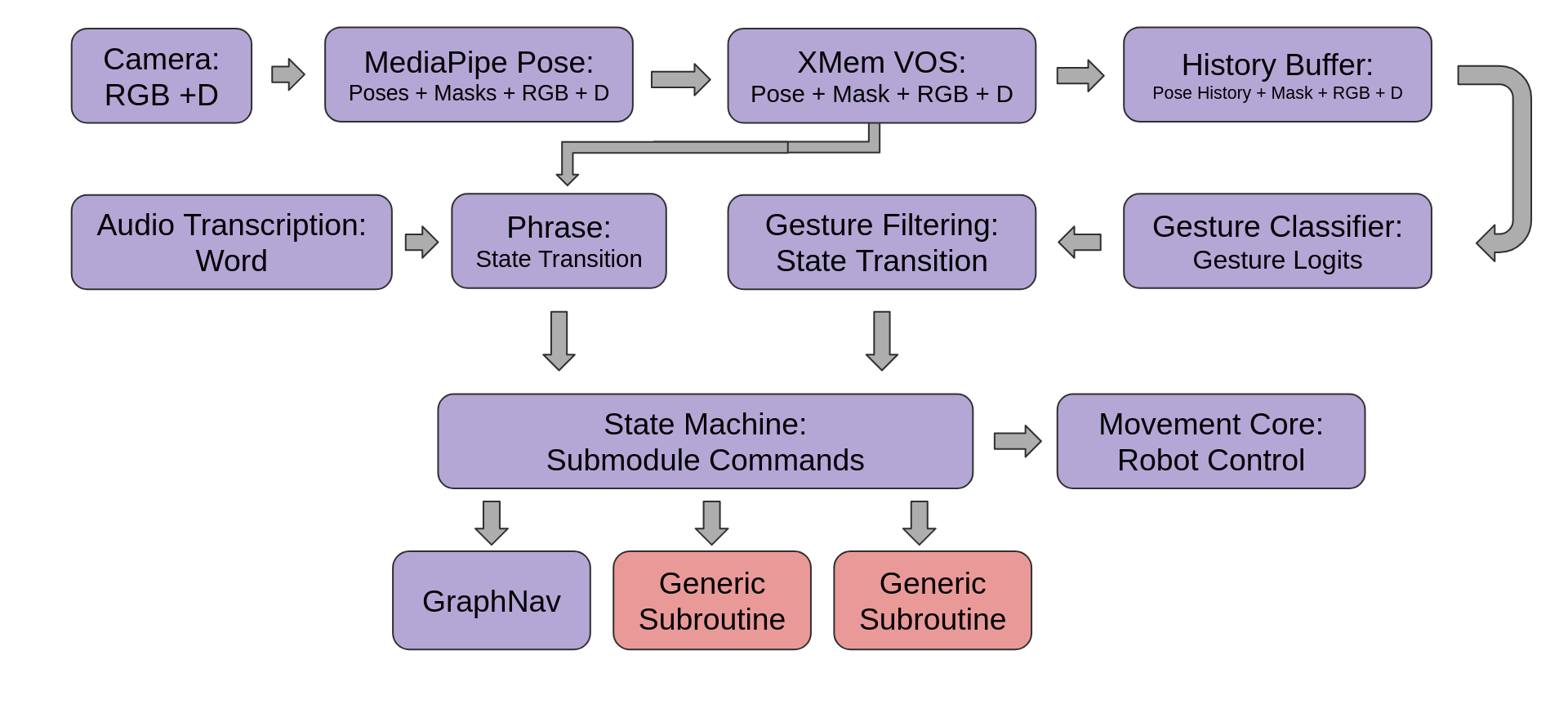

Spot follows humans using segmentation models. XMem propagates the original segmentation mask through time, which makes Spot robust to distractions in crowded environments and to occlusions. Spot also recognizes simple gestures to start and stop following.

MediaPipe creates a segmentation mask of the first human to make a T pose, and XMem propagates that mask through time. This makes Spot robust to distractions in crowded environments and to occlusions: even with multiple humans in the scene, Spot keeps following the controller, including after the controller has been completely occluded.
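
The following is a minimal sketch of how a propagated person mask could be turned into motion, assuming the tracker outputs one binary mask per frame. The names `follow_step`, `mask_target`, and `FollowCommand`, the gains, and the use of mask area as a rough distance proxy are all hypothetical and not taken from SpotNLP; sending the command to Spot is not shown.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

import numpy as np


@dataclass
class FollowCommand:
    forward: float   # m/s; positive moves toward the person
    yaw_rate: float  # rad/s; positive turns left (assumed body-frame convention)


def mask_target(mask: np.ndarray) -> Optional[Tuple[float, float]]:
    """Summarize a binary person mask as (horizontal offset, area fraction).

    offset is -1 at the left image edge and +1 at the right edge.
    Returns None when the mask is empty (person occluded or out of view).
    """
    _, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    half_width = mask.shape[1] / 2.0
    offset = (xs.mean() - half_width) / half_width
    area = xs.size / mask.size
    return offset, area


def follow_step(mask: np.ndarray,
                target_area: float = 0.05,  # hypothetical desired mask area fraction
                k_yaw: float = 0.8,         # hypothetical turning gain
                k_fwd: float = 1.0,         # hypothetical forward gain
                max_speed: float = 1.0) -> FollowCommand:
    """Proportional controller: keep the person centered at a target mask area."""
    target = mask_target(mask)
    if target is None:
        # Hold position while the tracker waits for the person to reappear.
        return FollowCommand(forward=0.0, yaw_rate=0.0)
    offset, area = target
    yaw_rate = -k_yaw * offset  # person right of center -> turn right
    # A smaller mask suggests the person is farther away -> move forward;
    # a larger mask suggests the robot is too close -> back up.
    forward = k_fwd * (1.0 - area / target_area)
    forward = float(np.clip(forward, -max_speed, max_speed))
    return FollowCommand(forward=forward, yaw_rate=float(yaw_rate))


if __name__ == "__main__":
    mask = np.zeros((100, 200), dtype=bool)
    mask[40:60, 150:170] = True  # small blob right of center
    print(follow_step(mask))
```

In this sketch an empty mask produces a zero command, so the robot waits in place and resumes once the tracker recovers the person's mask. A real implementation might estimate distance from depth data rather than mask area.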

SpotNLP can also recognize simple gestures to start and stop following. The controller makes a T pose to start following and uses a "stop" gesture to toggle following on or off. The user can also change the following distance with one of three gestures: "close", "medium", and "far". SpotNLP uses MediaPipe to detect poses; the poses are combined into a history vector, which is passed to an MLP trained to classify actions. Because MediaPipe's pose detection is not tied to a particular person, no additional training is required to pair with a new user, so anyone can use the robot without extra setup.
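
A minimal sketch of the history-vector idea follows, assuming MediaPipe Pose supplies 33 (x, y) landmarks per frame. The window length, label set, normalization, layer sizes, and the distances in `FOLLOW_DISTANCE_M` are hypothetical, and the random weights stand in for a trained model; this is not SpotNLP's actual classifier.

```python
from collections import deque
from typing import Optional

import numpy as np

NUM_LANDMARKS = 33  # MediaPipe Pose returns 33 body landmarks per person
WINDOW = 15         # hypothetical history length, in frames
LABELS = ["none", "t_pose", "stop", "close", "medium", "far"]  # hypothetical class order
FOLLOW_DISTANCE_M = {"close": 1.0, "medium": 2.0, "far": 3.0}  # hypothetical values

# MediaPipe Pose landmark indices for shoulders and hips
L_SHOULDER, R_SHOULDER, L_HIP, R_HIP = 11, 12, 23, 24


def normalize_pose(landmarks: np.ndarray) -> np.ndarray:
    """Make a (33, 2) array of image coordinates position- and scale-invariant."""
    hip_center = (landmarks[L_HIP] + landmarks[R_HIP]) / 2.0
    shoulder_width = np.linalg.norm(landmarks[L_SHOULDER] - landmarks[R_SHOULDER]) + 1e-6
    return (landmarks - hip_center) / shoulder_width


class GestureClassifier:
    """Sliding-window history vector fed to a one-hidden-layer MLP."""

    def __init__(self, hidden: int = 64, seed: int = 0):
        rng = np.random.default_rng(seed)
        in_dim = WINDOW * NUM_LANDMARKS * 2
        # Placeholder random weights; a real system would load trained parameters.
        self.w1 = rng.normal(0.0, 0.01, (in_dim, hidden))
        self.b1 = np.zeros(hidden)
        self.w2 = rng.normal(0.0, 0.01, (hidden, len(LABELS)))
        self.b2 = np.zeros(len(LABELS))
        self.history = deque(maxlen=WINDOW)  # oldest frame drops out automatically

    def history_vector(self) -> np.ndarray:
        """Flatten the buffered frames into one input vector for the MLP."""
        return np.concatenate([frame.ravel() for frame in self.history])

    def update(self, landmarks: np.ndarray) -> Optional[str]:
        """Add one frame of landmarks; return a label once the window is full."""
        self.history.append(normalize_pose(landmarks))
        if len(self.history) < WINDOW:
            return None
        x = self.history_vector()
        h = np.maximum(0.0, x @ self.w1 + self.b1)  # ReLU hidden layer
        logits = h @ self.w2 + self.b2
        return LABELS[int(np.argmax(logits))]


if __name__ == "__main__":
    clf = GestureClassifier()
    rng = np.random.default_rng(1)
    label = None
    for _ in range(WINDOW):
        label = clf.update(rng.random((NUM_LANDMARKS, 2)))  # stand-in for real landmarks
    print("gesture:", label)
    if label in FOLLOW_DISTANCE_M:
        print("new following distance (m):", FOLLOW_DISTANCE_M[label])
```

Normalizing each frame relative to the hips and shoulder width is one way to keep the classifier independent of where the user stands in the image and how tall they appear.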

Natural Language Interface

SpotNLP enables natural language commands for long-horizon tasks. In this demo, the user conversationally instructs Spot to follow them, save semantic keypoints with contextual information about the environment, and navigate to those keypoints later. The user can also ask Spot questions about the environment and implicitly reference previous keypoints using context from the semantic map. Future work includes detecting pointing gestures to enable more precise references to keypoints, and integrating manipulation capabilities using Spot's arm. The more capabilities Spot has, the more useful the natural language interface becomes.
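
As a rough illustration of a semantic map, the sketch below stores keypoints with user-supplied context, renders them as text that could be included in an LLM prompt, and resolves a loosely worded reference. All names and the map-frame coordinates are hypothetical, and the keyword-overlap `resolve` is only a simple stand-in for the language model, which would handle implicit references far more flexibly.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple

# Hypothetical filler words ignored when matching a query to a keypoint.
STOPWORDS = {"the", "a", "an", "to", "of", "in", "on", "at", "is",
             "me", "go", "take", "back", "where", "and"}


def tokens(text: str) -> Set[str]:
    """Lowercase alphabetic words, minus stopwords (underscores split words)."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


@dataclass
class Keypoint:
    name: str
    position: Tuple[float, float]  # hypothetical (x, y) in a map frame, meters
    description: str               # contextual information given by the user


@dataclass
class SemanticMap:
    keypoints: Dict[str, Keypoint] = field(default_factory=dict)

    def save(self, name: str, position: Tuple[float, float], description: str) -> None:
        """Store (or overwrite) a named keypoint with its context."""
        self.keypoints[name] = Keypoint(name, position, description)

    def as_context(self) -> str:
        """Render the map as plain text, e.g. for inclusion in an LLM prompt."""
        lines = [f"- {kp.name} at {kp.position}: {kp.description}"
                 for kp in self.keypoints.values()]
        return "Known locations:\n" + "\n".join(lines)

    def resolve(self, query: str) -> Optional[Keypoint]:
        """Return the keypoint sharing the most words with the query, if any."""
        query_words = tokens(query)
        best, best_score = None, 0
        for kp in self.keypoints.values():
            score = len(query_words & tokens(f"{kp.name} {kp.description}"))
            if score > best_score:
                best, best_score = kp, score
        return best


if __name__ == "__main__":
    m = SemanticMap()
    m.save("charging_station", (3.0, 1.5), "next to the coffee machine in the break room")
    m.save("lab_door", (10.0, -2.0), "the red door to the robotics lab")
    print(m.as_context())
    print(m.resolve("take me back to where the coffee is"))
```

Keeping the map as plain, serializable records makes it easy to hand the whole thing to a language model as context, which is what lets later requests refer to places indirectly.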