Zero-Shot Robotic Manipulation

We predict video sequences of robot interactions using pre-trained video diffusion models and use these predictions to plan actions.

Overview

Our approach to zero-shot manipulation policy learning leverages large-scale video diffusion models without any additional training.

We use pre-trained video diffusion models (WAN) to generate future video frames conditioned on the current observation and a text prompt describing the desired action. We then use these predicted video sequences to plan robot actions with a Diffusion Policy. This lets us perform a range of manipulation tasks without any task-specific training, demonstrating the potential of large-scale video diffusion models for zero-shot robotic manipulation.
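The two-stage pipeline can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: `plan`, `run`, and the `VideoModel`/`ActionPolicy` interfaces are hypothetical names, the re-planning loop (execute a chunk of actions, then predict a new video from the latest observation) is an assumption, and the toy models replace WAN and the Diffusion Policy with trivial stand-ins so the control flow can run end to end.

```python
from typing import Callable, List, Sequence

Observation = List[float]  # hypothetical stand-in for a camera image
Action = List[float]       # hypothetical stand-in for a robot command

# Hypothetical interfaces for the two stages; these are NOT the WAN or Diffusion Policy APIs.
VideoModel = Callable[[Observation, str], Sequence[Observation]]
ActionPolicy = Callable[[Observation, Sequence[Observation]], Sequence[Action]]


def plan(observation: Observation, prompt: str,
         video_model: VideoModel, policy: ActionPolicy) -> Sequence[Action]:
    """Stage 1: imagine the task as a video. Stage 2: turn the imagined video into actions."""
    predicted_frames = video_model(observation, prompt)
    return policy(observation, predicted_frames)


def run(initial: Observation, prompt: str, video_model: VideoModel,
        policy: ActionPolicy, step: Callable[[Observation, Action], Observation],
        num_replans: int = 2) -> Observation:
    """Execute each planned action chunk, then re-plan from the new observation (assumed loop)."""
    observation = initial
    for _ in range(num_replans):
        for action in plan(observation, prompt, video_model, policy):
            observation = step(observation, action)
    return observation


def toy_video_model(obs: Observation, prompt: str) -> List[Observation]:
    # Toy stand-in for WAN: the "video" shows the object moving +1.0 per frame for 3 frames.
    return [[obs[0] + k] for k in range(1, 4)]


def toy_policy(obs: Observation, frames: Sequence[Observation]) -> List[Action]:
    # Toy stand-in for the Diffusion Policy: action = change between consecutive frames.
    prev = [obs] + list(frames[:-1])
    return [[f[0] - p[0]] for p, f in zip(prev, frames)]


final = run([0.0], "push the block right", toy_video_model, toy_policy,
            step=lambda obs, a: [obs[0] + a[0]])
```

Keeping the video model and the policy behind plain callables means either stage could be swapped (for example, a different video generator) without touching the control loop.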

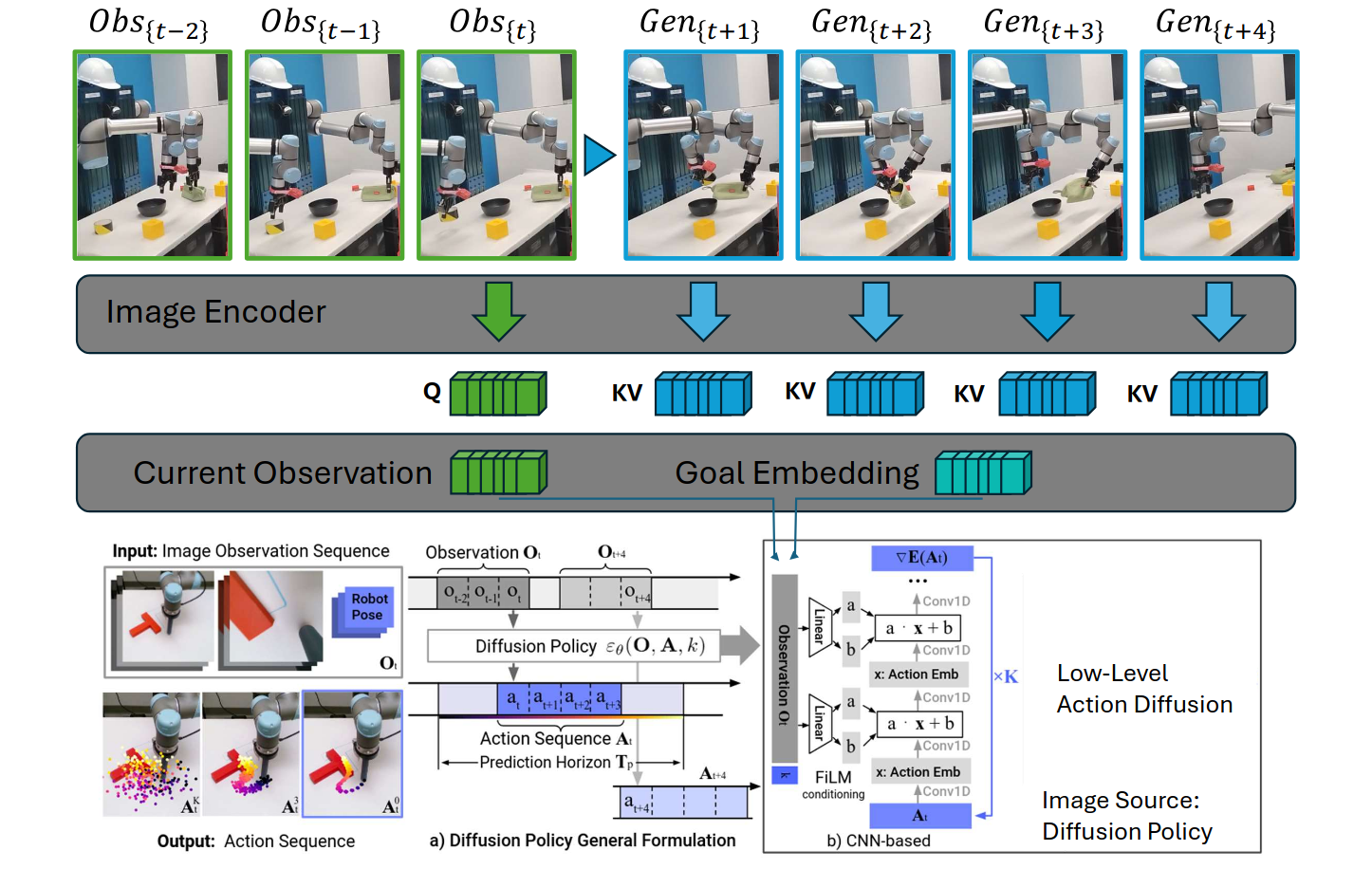

The Diffusion Policy takes as input the current observation together with a feature that encodes the predicted video frames from the WAN model. This feature is computed with cross-attention: the current observation provides the query, and the predicted video frames provide the keys and values. The Diffusion Policy then generates a sequence of robot actions expected to reach the outcome shown in the predicted video frames.
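A minimal NumPy sketch of this conditioning path, assuming the observation and each predicted frame have already been embedded into token vectors (the encoder is not specified here). `video_feature` implements single-head scaled dot-product cross-attention with observation tokens as queries and predicted-frame tokens as keys and values. `sample_actions` is a standard DDPM reverse loop (with sigma_t^2 = beta_t) of the kind Diffusion Policy uses to denoise an action sequence. How the feature is fed to the noise-prediction network, the projection sizes, and the noise schedule are all assumptions, and every function name is hypothetical. `oracle_eps` is a toy stand-in for a trained network: it returns the exact noise relative to a fixed target, so sampling recovers that target and ignores the conditioning.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def video_feature(obs_tokens, frame_tokens, w_q, w_k, w_v):
    """Hypothetical single-head cross-attention over predicted frames.

    obs_tokens:   (n_obs, d_model)    current-observation embedding -> queries
    frame_tokens: (n_frames, d_model) predicted-frame embeddings    -> keys/values
    Returns the attended feature, shape (n_obs, d_head).
    """
    q = obs_tokens @ w_q
    k = frame_tokens @ w_k
    v = frame_tokens @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (n_obs, n_frames)
    return weights @ v


def sample_actions(eps_model, cond, horizon, action_dim, betas, rng: np.random.Generator):
    """DDPM reverse process over an action sequence of shape (horizon, action_dim).

    eps_model(x_t, t, cond) predicts the noise in x_t; cond is assumed to carry the
    observation and the video feature.
    """
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal((horizon, action_dim))  # start from pure Gaussian noise
    for t in reversed(range(len(betas))):
        eps = eps_model(x, t, cond)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)
        else:
            x = mean  # no noise is added on the final step
    return x


rng = np.random.default_rng(0)
d_model, d_head = 16, 8
obs = rng.standard_normal((1, d_model))      # one observation token (assumed)
frames = rng.standard_normal((8, d_model))   # eight embedded predicted frames (assumed)
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) for _ in range(3))
feature = video_feature(obs, frames, w_q, w_k, w_v)

betas = np.linspace(1e-4, 0.02, 50)          # assumed linear noise schedule
alpha_bars = np.cumprod(1.0 - betas)
target = np.tile(np.linspace(0.0, 1.0, 4), (10, 1))  # toy "desired" action sequence


def oracle_eps(x, t, cond):
    # Toy stand-in for a trained network: exact noise relative to `target`; ignores cond.
    return (x - np.sqrt(alpha_bars[t]) * target) / np.sqrt(1.0 - alpha_bars[t])


actions = sample_actions(oracle_eps, feature, horizon=10, action_dim=4, betas=betas, rng=rng)
```

Generating the whole action sequence in one denoising pass, rather than one action at a time, matches the description of the policy producing a sequence of actions toward the predicted outcome.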

Proposal PDF

For more details, please refer to our project proposal.